Edge AI refers to running artificial intelligence algorithms locally on edge devices (near the data source) instead of exclusively in cloud data centers. By processing data on devices like sensors, smartphones, cameras, or edge servers, Edge AI reduces latency and bandwidth use, enhances privacy, and enables real-time decision-making even without constant cloud connectivity. This report provides a high-level overview of Edge AI trends through 2026, covering market growth, technological advances, key applications, and future prospects.

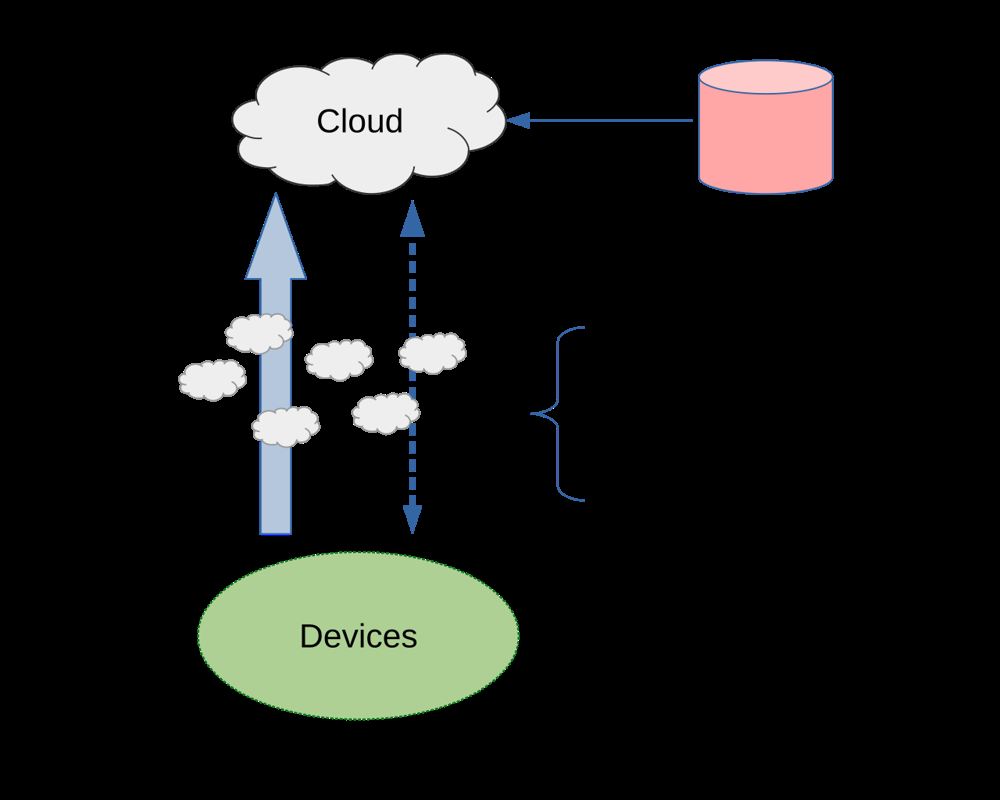

Edge computing paradigm: AI processing is pushed from the cloud to distributed edge nodes closer to devices, reducing round-trip data transfers.

1. Market Size and Growth Forecasts

Global Market Overview: The global Edge AI market has been growing rapidly and is projected to continue on a steep upward trajectory through 2026. In 2023 the market was estimated at around $17–20 billion (fortunebusinessinsights.com). Analysts forecast annual growth rates above 20%, driving the market to tens of billions of USD by 2026 (precedenceresearch.com). For example, one analysis calculated a ~21% CAGR over the next decade, with the market expanding from $17.5B in 2023 to over $21B in 2024 (precedenceresearch.com). Another predicts an even higher CAGR (~33%), indicating the potential for the Edge AI market to roughly double every 2–3 years (fortunebusinessinsights.com). While exact projections vary, double-digit growth is consistently expected, fueled by rising demand for real-time AI and IoT applications at the edge.
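The doubling estimate above follows from compound-growth arithmetic. The sketch below is illustrative only: the function names are hypothetical, and the rates and dollar figures are simply the ones quoted in the preceding paragraph.

```python
import math


def doubling_time_years(cagr: float) -> float:
    """Years needed for a value to double at a constant compound annual growth rate."""
    if cagr <= 0:
        raise ValueError("cagr must be positive")
    return math.log(2) / math.log(1 + cagr)


def year_over_year_growth(start: float, end: float) -> float:
    """Fractional growth between two consecutive annual values."""
    return end / start - 1


if __name__ == "__main__":
    # The two CAGR forecasts quoted in the text (~21% and ~33%).
    for rate in (0.21, 0.33):
        print(f"CAGR {rate:.0%}: doubles in about {doubling_time_years(rate):.1f} years")
    # The quoted 2023 -> 2024 step ($17.5B to $21B).
    print(f"2023 -> 2024 growth: {year_over_year_growth(17.5, 21.0):.0%}")
```

At ~33% the doubling time works out to roughly 2.4 years, consistent with the "every 2–3 years" statement, while at ~21% it is closer to 3.6 years.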

Regional Breakdown: North America, Europe, and Asia-Pacific dominate Edge AI adoption:

- North America (NA) – Currently the largest share. NA accounted for about 40% of the global Edge AI market in 2023 (≈$7 billion; precedenceresearch.com). This leadership is due to advanced infrastructure and major tech firms driving edge innovation. The U.S. alone had a $4.9B Edge AI market in 2023 (precedenceresearch.com). NA is expected to maintain strong growth into 2026, but its global share may be slightly eroded by faster-growing regions.

- Asia-Pacific (APAC) – Rapid growth region. APAC held roughly one-third of the market in 2023 and is forecast to expand dramatically. IDC projects Asia-Pacific will comprise over 40% of the global Edge AI market by 2026, overtaking other regions (precedenceresearch.com). Massive investments in 5G, smart cities, and industrial automation across China, India, and Southeast Asia are accelerating APAC’s Edge AI uptake.

- Europe – A significant market (~20–25% share in 2023, about $5 billion) with steady growth. Europe’s Edge AI adoption is driven by Industry 4.0 manufacturing initiatives, healthcare, and government digital agendas. The Europe edge AI market was ~$5.0B in 2024 and is expected to grow ~22% annually in the latter half of the 2020s (grandviewresearch.com). While Europe’s growth is slightly slower than APAC’s, it remains an important region with ongoing edge deployments in smart factories and cities.

Other regions (Latin America, Middle East & Africa) currently hold smaller shares but are gradually adopting edge AI as infrastructure improves. By 2026, Edge AI will be a global phenomenon, though North America and Asia-Pacific combined are likely to command ~70–80% of the market by value.

Segment-wise Trends: The Edge AI market can be segmented into hardware, software, and services, each experiencing robust growth:

- Hardware: This includes edge AI chips (GPUs, NPUs, ASICs, FPGAs), IoT devices, and on-premise edge servers/gateways. Hardware often represents the largest portion of revenues today due to the cost of specialized devices. For instance, the Edge AI hardware market was estimated at $24.2B in 2024 and projected to reach ~$55B by 2029 (17.7% CAGR; marketsandmarkets.com). However, some other analyses define a narrower hardware scope (e.g. only AI accelerator units), yielding smaller figures (one study put edge AI hardware at $0.59B in 2019 growing to $2.16B by 2026; fnfresearch.com). Overall trend: hardware sales are steadily rising as more devices (from cameras to vehicles) are equipped with AI capabilities. The growth is moderate relative to software, since hardware costs tend to decrease over time even as unit volumes increase.

- Software: This refers to AI models, frameworks, and platforms running at the edge. Edge AI software is the fastest-growing segment. Estimates of its current size differ with how each source draws the line between hardware and software: one analysis attributes over 50% of 2022 edge AI revenue to software (gminsights.com), reflecting the value of AI algorithms and solutions delivered to edge deployments, while others size it as a much smaller base. Forecasts show edge AI software expanding at ~25–30% CAGR. For example, edge AI software is projected to grow from ~$1.9B in 2024 to $7.2B by 2030 (24.7% CAGR; marketsandmarkets.com). Drivers include the proliferation of pre-trained AI models for edge, optimization toolchains (e.g. TensorFlow Lite, PyTorch Mobile), and “edge-to-cloud” software platforms that simplify deploying AI across distributed devices (gminsights.com).

- Services: As companies adopt edge AI, demand is rising for integration services, consulting, and ongoing support. The services segment (system integration, deployment, managed services) is expected to expand at ~26–27% CAGR through 2026 (gminsights.com). Service growth is high because implementing edge AI can be complex – organizations often need help retrofitting legacy systems, managing distributed devices, and ensuring security. Many lack in-house expertise, creating a market for specialists who can train staff, integrate edge solutions with cloud and IT systems, and maintain edge deployments. By 2026, services will constitute a significant share of Edge AI spending as deployments scale and users seek to maximize value from their investments.

Market Growth Drivers: Several trends underpin the strong growth outlook through 2026:

- Explosion of IoT Data: The surge of IoT sensors and devices is generating massive data streams that are impractical to send entirely to the cloud. Edge AI analyzes this data locally to enable real-time insights. For example, a modern airliner generates terabytes of sensor data daily, which “only Edge AI makes it possible to fully utilize” by analyzing locally and only sending essential results to the cloud (advian.fi).

- 5G Rollout: The global deployment of 5G networks (projected 400M+ 5G connections in Asia Pacific by 2025, per nextmsc.com) complements edge computing. 5G’s low latency and high bandwidth support connecting many edge devices and enable new applications (e.g. AR/VR, V2X communications) that require on-site AI processing to meet latency requirements (precedenceresearch.com). This symbiosis of 5G and Edge AI is accelerating adoption in densely connected environments.

- Enterprise Digital Transformation: Industries are investing in AI to automate and improve operations. Edge AI often provides the only feasible way to deploy AI in scenarios where constant cloud connectivity is costly, unreliable, or too slow. The push for smarter factories, stores, cities, and vehicles is leading organizations to adopt edge AI solutions for immediate, locally-relevant AI insights.

- Privacy and Security Considerations: Regulatory and consumer concerns about data privacy are encouraging on-device data processing. Keeping sensitive data (e.g. camera feeds, patient vitals) at the edge can help comply with data protection laws and reduce exposure of personal data (developer.nvidia.com). This trend, along with emerging regulations like the EU’s AI Act (a legal framework for trustworthy AI; digital-strategy.ec.europa.eu), is prompting companies to process more data on-premises via edge AI, boosting market growth for secure edge solutions.

In summary, the Edge AI market is on track for robust expansion through 2026 across all regions and segments. North America and Asia-Pacific will lead in scale, while software and services see especially rapid growth. These figures underscore a broader shift: by 2026 edge AI will transition from early adoption to mainstream deployment, becoming a foundational technology across industries.

Table: Estimated Edge AI Market Size by Region (USD billions)

| Region | 2023 Market Size | 2026 Projected Market Size* | Growth Drivers |
|---|---|---|---|
| North America | ~$7.0 (precedenceresearch.com) | ~$12–15 (est.) | Early adopter of edge tech; strong IT infrastructure; autonomous vehicles, smart cities demand. |
| Asia-Pacific | ~$5.5–6.0 (est.) | ~$12+ (est.) | Fastest growth (precedenceresearch.com), driven by 5G rollout, smart city investments, large IoT deployments. |
| Europe | ~$4.5–5.0 (est.) | ~$8–9 (est.) | Industry 4.0 manufacturing, EU digital initiatives; focus on privacy-compliant AI at edge. |
| Rest of World (LatAm, MEA) | ~$1–2 (est.) | ~$3–4 (est.) | Gradual adoption as infrastructure improves; smart infrastructure and telecom driving initial projects. |
| Global Total | ~$17.5–20 (precedenceresearch.com, fortunebusinessinsights.com) | $30+ (est.) | >20% CAGR expected, as Edge AI becomes ubiquitous across devices and industries by 2026. |

*Estimated 2026 values are extrapolated from current CAGR forecasts and may vary by source. Asia-Pacific is expected to capture the largest share by 2026, with North America close behind.
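As a rough check on how the starred 2026 estimates could be derived, the sketch below compounds the 2023 global range at the lower (~21%) CAGR cited earlier and backs out the growth rate implied by the North America row. The function names are hypothetical, and the three-year horizon (2023 to 2026) is an assumption about how the extrapolation was done, not something the sources state.

```python
def project(value: float, cagr: float, years: int) -> float:
    """Compound a starting value forward at a constant annual growth rate."""
    return value * (1 + cagr) ** years


def implied_cagr(start: float, end: float, years: int) -> float:
    """Constant annual growth rate that turns `start` into `end` over `years`."""
    return (end / start) ** (1 / years) - 1


if __name__ == "__main__":
    # Global total: $17.5-20B in 2023, compounded for 3 years at ~21% (assumed horizon).
    low, high = project(17.5, 0.21, 3), project(20.0, 0.21, 3)
    print(f"Global 2026 at 21% CAGR: ${low:.1f}B to ${high:.1f}B")

    # North America row: ~$7.0B in 2023 to ~$12-15B in 2026.
    na_low, na_high = implied_cagr(7.0, 12.0, 3), implied_cagr(7.0, 15.0, 3)
    print(f"North America implied CAGR: {na_low:.1%} to {na_high:.1%}")
```

Under these assumptions the global figure lands at roughly $31–35B, in line with the table's "$30+" entry, and the North America row implies annual growth of roughly 20–29%.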

2. Key Technological Advances

Recent technological developments in Edge AI hardware and algorithms are significantly improving performance and fostering wider adoption. Optimizations at both the chip level and the software level are enabling powerful AI inference on smaller, power-constrained devices – a crucial factor in bringing AI to the edge.

Advances in Edge AI Chips: Semiconductor innovation has delivered a new generation of AI accelerators tailored for edge devices. These chips focus on maximizing AI performance per watt in a compact footprint:

- Specialized AI Processors: Companies like NVIDIA, Google, Apple, Qualcomm, and emerging startups have developed processors specifically for edge AI. For example, NVIDIA’s Jetson Orin (launched 2022–2023) is a system-on-module boasting up to 275 TOPS (trillions of operations per second) of AI performance in a palm-sized form factor (electropages.com). This represents an 8× increase over the previous generation, effectively delivering server-class AI compute at the edge. Such power enables running sophisticated deep learning models locally on robots, cameras, and vehicles that could previously only run in data centers.

- Efficiency through ASICs and NPUs: Application-specific integrated circuits (ASICs) and neural processing units (NPUs) are being embedded in devices for dedicated AI tasks. For instance, smartphone chips now include NPUs for on-device speech recognition, and security cameras include vision AI ASICs. Apple’s acquisition of Xnor.ai – a startup known for ultra-low-power edge AI – is an example of this trend. Apple has reportedly integrated Xnor’s efficient edge image recognition technology into iPhones and webcams to enable AI features without cloud dependency (globenewswire.com).

- Improved Semiconductor Technologies: Hardware manufacturers are leveraging smaller nanometer process nodes and 3D chip architectures to enhance performance. Shrinking chip fabrication from 14nm to 7nm and below increases transistor density, allowing more AI computing capability in the same power envelope. Additionally, new packaging techniques (3D stacking, chiplets) improve data bandwidth between memory and compute, critical for AI workloads (wevolver.com).

- Edge TPUs and AI Co-processors: Cloud providers have also built dedicated edge AI chips. Google’s Edge TPU is designed for fast inference on vision models with minimal power draw. According to one market report, Google launched Edge TPU 3.0 in 2024, achieving faster inferencing and lower latency for smart city, retail, and healthcare edge applications (precedenceresearch.com). These purpose-built accelerators can be embedded in IoT gateways or small devices, dramatically boosting their AI capabilities.

- Benchmark Example: The cumulative result of these advances is that edge devices can now run complex deep neural networks in real time. A 2023 edge AI module can perform tasks like image classification or object detection 50–100× faster than models on 2016-era hardware, while staying within mobile power budgets. This leap in localized processing power is a key enabler for autonomous cars, drones, and other AI-driven edge systems.

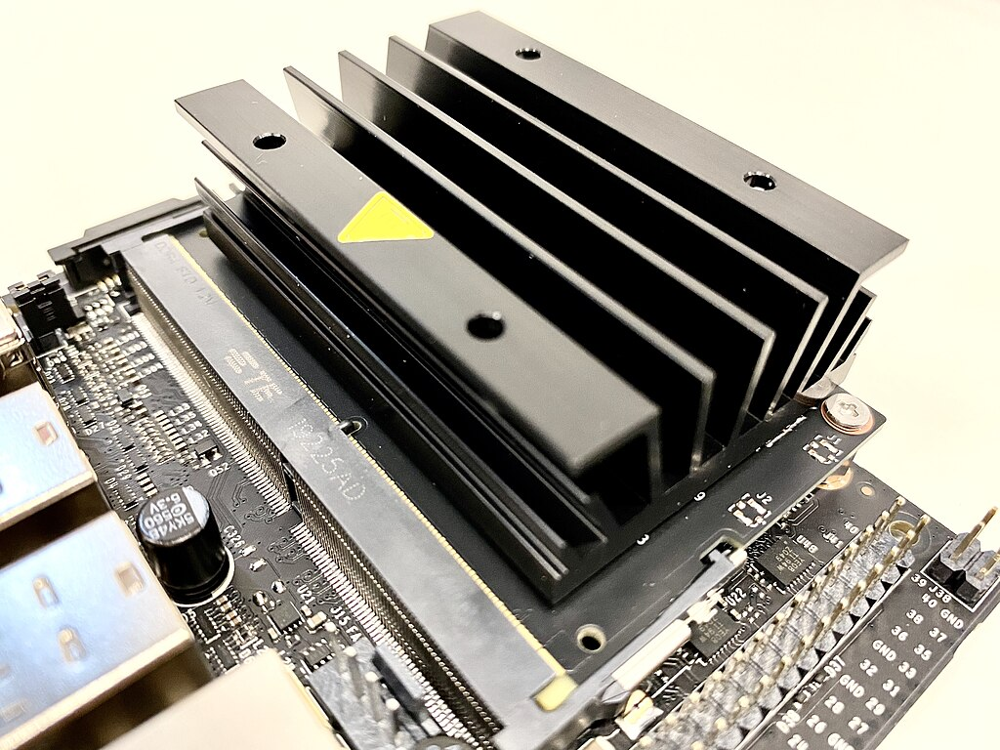

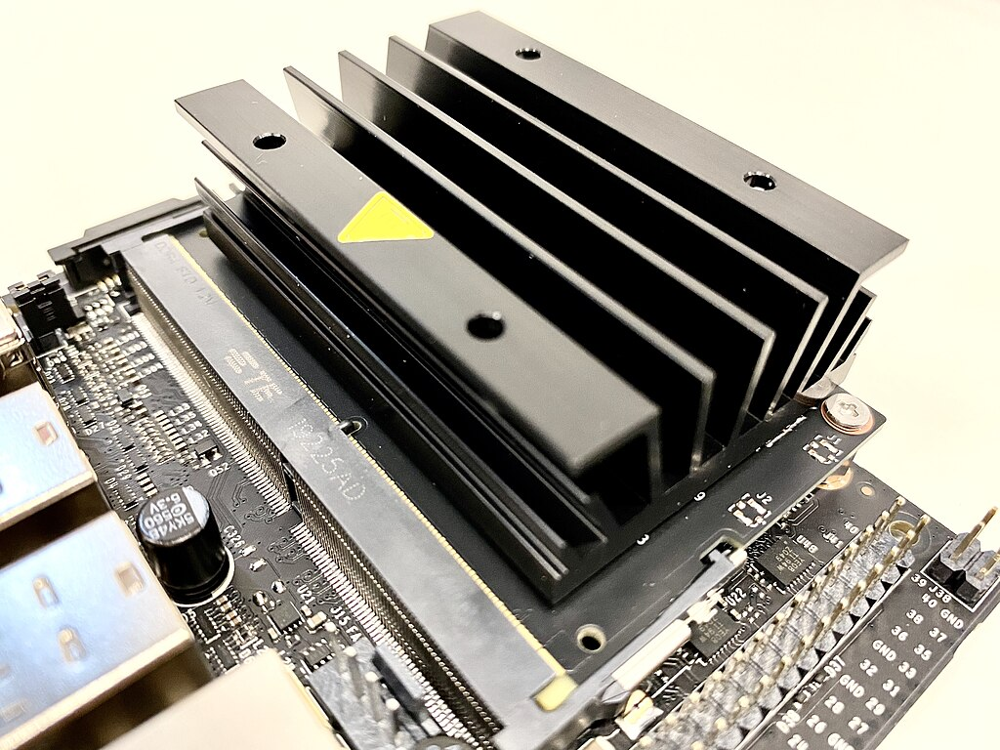

Example of Edge AI hardware – NVIDIA Jetson module with onboard GPU and heat sink. Modern edge AI boards pack powerful neural compute engines in a small form factor, enabling high-performance AI on devices outside data centers.

Optimized Edge AI Algorithms: Alongside hardware, researchers and engineers have made great strides in adapting AI algorithms to run efficiently at the network edge:

- Model Compression: Techniques like quantization, pruning, and knowledge distillation are widely used to shrink AI models for edge deployment. By reducing numerical precision (e.g. 32-bit weights to 8-bit or lower) and removing redundant neurons, models require far less memory and computation with minimal accuracy loss. Co-design of hardware and software is crucial – chips are now optimized for lower-bit arithmetic, and in turn models are optimized to use those capabilities (wevolver.com). This co-optimization can improve energy efficiency by orders of magnitude, making it feasible to run deep learning on battery-powered devices (wevolver.com). Example: A neural network compressed via quantization might run on an edge device using 1/4 the memory and compute, yet deliver almost the same accuracy – a breakthrough for deploying vision or NLP models on small edge hardware.

- TinyML: A movement toward extremely lightweight ML, TinyML, has enabled AI on microcontrollers and sensors. TinyML models are often just kilobytes in size and can operate on milliwatt-class processors (wevolver.com). This opens up new use cases: always-on analytics in IoT sensors, wearables, and appliances. For instance, a vibration sensor with a TinyML model can detect equipment anomalies on the spot, or a smartwatch can continuously analyze heart rhythm without offloading data. By 2026, we expect TinyML techniques to further mature, allowing sophisticated AI (e.g. simple image or voice recognition) to run on ubiquitous, ultra-low-power devices that previously had zero AI capability.

- Federated and Distributed Learning: To train and update AI models across edge devices without centralizing data, federated learning has emerged as a key approach. Federated learning allows devices to collaboratively learn a shared model while keeping raw data local (only model updates are aggregated centrally). This addresses privacy and bandwidth issues and leverages the diversity of edge data. It’s particularly relevant for applications like predictive text on smartphones or anomaly detection on distributed sensors. Edge AI systems increasingly use federated learning to improve models in the field – for example, training a healthcare monitoring AI across multiple hospitals’ edge devices without exposing patient data (wevolver.com). By 2026, federated learning and related privacy-preserving techniques (like on-device training and differential privacy) are expected to be standard practice for many edge AI deployments, continuously improving AI performance securely.

- Edge-Oriented AI Frameworks: The software ecosystem has evolved with frameworks tailored to edge constraints. Tools such as TensorFlow Lite, PyTorch Mobile, ONNX Runtime, and OpenVINO provide optimized libraries for mobile CPUs, GPUs, and NPUs. They handle tasks like neural network quantization, acceleration, and device-specific optimizations automatically, making it much easier for developers to deploy AI on edge hardware. Moreover, major cloud platforms (AWS Greengrass, Azure IoT Edge, Google Cloud IoT) now offer end-to-end support for edge AI, from training in the cloud to packaging models and pushing them to edge devices. This “cloud-to-edge” pipeline integration simplifies deployment of AI across thousands of edge nodes (gminsights.com), ensuring that technological advances in one domain (e.g. a new model or update) can be quickly propagated to all devices.

- Real-Time and Adaptive AI: Edge AI algorithms are being designed for real-time responsiveness and adaptability. Unlike cloud AI that might run in batches, edge AI often needs to make split-second decisions (e.g. a car detecting a pedestrian). Recent advances in model architectures (like spiking neural networks or transformer models optimized for streaming data) and scheduling techniques allow consistent low-latency performance on edge devices. Additionally, some edge AI systems can now adapt on the fly – e.g. selectively simplifying the AI model when battery is low or data link is weak, and then scaling up when resources allow. Such dynamic AI ensures robust operation in the variable conditions typical at the edge.
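The quantization step mentioned under Model Compression can be sketched in a few lines. This is a minimal illustration of affine 8-bit quantization in plain Python, not the implementation used by TensorFlow Lite or any other framework; the function names and the example weights are hypothetical.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float, int]:
    """Map float weights to signed 8-bit integers using a scale and zero-point."""
    # Extend the range to include 0.0 so that zero stays exactly representable.
    w_min = min(min(weights), 0.0)
    w_max = max(max(weights), 0.0)
    scale = (w_max - w_min) / 255.0
    if scale == 0.0:  # all-zero input: any positive scale works
        scale = 1.0
    zero_point = round(-128 - w_min / scale)
    quantized = [max(-128, min(127, round(w / scale) + zero_point)) for w in weights]
    return quantized, scale, zero_point


def dequantize(quantized: List[int], scale: float, zero_point: int) -> List[float]:
    """Recover approximate float weights from their 8-bit representation."""
    return [(q - zero_point) * scale for q in quantized]


if __name__ == "__main__":
    weights = [-0.8, -0.1, 0.0, 0.3, 1.2]  # hypothetical layer weights
    q, scale, zp = quantize_int8(weights)
    restored = dequantize(q, scale, zp)
    max_err = max(abs(a - b) for a, b in zip(weights, restored))
    print("int8 values:", q)
    print(f"max round-trip error: {max_err:.4f} (scale = {scale:.4f})")
    # float32 stores 4 bytes per weight, int8 stores 1 byte per weight.
    print("memory ratio:", (4 * len(weights)) / (1 * len(weights)))
```

Storing each weight in one byte instead of four is where the "1/4 the memory" figure comes from; the round-trip error stays within one quantization step, which is why accuracy loss is often small.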

Impact on Performance and Adoption: These tech advances collectively boost Edge AI performance and reliability, making adoption far more attractive:

- Higher Performance: Tasks that once required cloud-scale processing can now be done locally. For example, an edge AI chip today can analyze a 4K security camera feed in real time with advanced analytics (object detection, facial recognition) on-site. This performance gain means better user experiences – devices respond instantly without cloud latency. It also enables entirely new edge applications (e.g. drones doing onboard vision-based navigation).

- Energy Efficiency: Optimized chips and models use less power, which is critical for mobile and remote devices. Longer battery life and lower heat generation mean edge AI can be embedded in more places (from wearables to sensors running on solar power). Efficient edge AI is especially important for adoption in IoT, where devices might need to run for years unattended. Continuous improvements in energy efficiency (through both hardware and algorithm tweaks) are reducing one of the key barriers to edge AI deployment.

- Cost Reduction: As edge AI hardware becomes commoditized and models become lighter, the cost per device comes down. In addition, processing data on the edge can save considerable cloud compute and data transfer costs. Businesses see ROI in deploying edge AI because it can lower ongoing connectivity expenses and enable offline functionality. The expanding availability of affordable development boards and accelerators (like NVIDIA Jetson Nano, Google Coral, Intel Movidius, etc.) is also driving experimentation and adoption in a wider community of developers and smaller companies.

- Improved Privacy & Security: Technological advances allow more data to stay on the device. This mitigates privacy concerns and reduces exposure to breaches (since less sensitive data leaves the device). Secure enclaves in edge chips and better encryption further protect on-device inference. Enhanced privacy through edge processing is a selling point, especially in healthcare, smart home, and automotive sectors where trust and compliance are crucial.

- Reliability and Autonomy: By not depending on constant cloud access, edge AI systems can function even in offline or poor connectivity scenarios. For instance, an autonomous vehicle or a factory robot with on-board AI can continue operating if the network cuts out. This autonomy is vital for mission-critical applications and is a direct result of having powerful, self-sufficient AI capabilities on the edge. The result is greater adoption in environments where connectivity is a challenge (rural areas, industrial sites, etc.).

In summary, cutting-edge chips and smarter algorithms are converging to make Edge AI faster, smaller, and more efficient than ever. By 2026, ongoing R&D (including emerging areas like quantum edge computing and explainable AI techniques) will further push the envelope, likely yielding edge devices that can run even complex generative AI models locally. This continual tech evolution is a driving force in the widespread uptake of Edge AI solutions.
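To make the federated learning idea from this section concrete, here is a minimal federated averaging sketch. It assumes a toy one-dimensional linear model and two hypothetical clients whose data follow y = 2x + 1; all names and hyperparameters are illustrative, and production systems add secure aggregation, client sampling, and much larger models.

```python
from typing import List, Sequence, Tuple

Sample = Tuple[float, float]  # (x, y) pair that never leaves its client


def local_update(weights: Sequence[float], data: Sequence[Sample],
                 lr: float = 0.1, epochs: int = 5) -> List[float]:
    """Run a few gradient-descent steps on one client's data for y = w*x + b."""
    w, b = weights
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w, b = w - lr * grad_w, b - lr * grad_b
    return [w, b]


def federated_average(client_weights: Sequence[Sequence[float]],
                      client_sizes: Sequence[int]) -> List[float]:
    """Average client models, weighting each by its number of local samples."""
    total = sum(client_sizes)
    return [
        sum(cw[i] * n for cw, n in zip(client_weights, client_sizes)) / total
        for i in range(len(client_weights[0]))
    ]


def train(clients: Sequence[Sequence[Sample]], rounds: int = 200) -> List[float]:
    """Only model parameters are exchanged each round; raw samples stay local."""
    global_weights = [0.0, 0.0]
    for _ in range(rounds):
        updates = [local_update(global_weights, data) for data in clients]
        global_weights = federated_average(updates, [len(d) for d in clients])
    return global_weights


if __name__ == "__main__":
    clients = [
        [(0.0, 1.0), (0.5, 2.0), (1.0, 3.0)],  # hypothetical device A
        [(0.2, 1.4), (0.8, 2.6)],              # hypothetical device B
    ]
    w, b = train(clients)
    print(f"learned w={w:.3f}, b={b:.3f}")
```

The server in this sketch only ever sees the two-number parameter vectors, which mirrors the privacy and bandwidth argument made for federated learning above.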

3. Key Application Areas and Examples

Edge AI is being adopted across a wide range of industries, bringing AI-driven intelligence to everything from factory floors to city streets and homes. Below we identify major sectors leveraging Edge AI, with real-world examples of use cases, along with the benefits and challenges encountered in each.

Manufacturing and Industrial IoT

Use Cases: Factories and industrial sites are embracing Edge AI for automation and quality improvement. A prime example is machine vision for quality control: high-speed cameras on production lines use on-device AI to inspect products in real time. This Edge AI system can “detect even the smallest quality deviations that are almost impossible to notice with the human eye”. Defective items are flagged or removed instantly, reducing waste and ensuring consistency. Other manufacturing uses include: predictive maintenance (edge AI analyzes vibration/temperature data from machines to predict failures before they happen), robotics (AI-guided robots and cobots making assembly decisions locally), and safety monitoring (e.g. detecting if a worker is too close to a hazardous machine and triggering an immediate shutdown).

Benefits: Edge AI in manufacturing boosts efficiency and uptime. By processing sensor data locally, equipment anomalies or quality issues are caught in milliseconds rather than minutes:

- Reduced Downtime: Predictive maintenance via edge AI helps avoid unplanned equipment failures. Local AI models on machines can detect subtle pattern changes and alert maintenance crews much faster than remote cloud analysis. This leads to higher equipment availability and cost savings.

- Improved Quality & Yield: As noted, vision systems with Edge AI can inspect 100% of products at full line speed, ensuring no defect goes unnoticed. This improves product quality and customer satisfaction. Automated edge inspection also means humans are freed from tedious visual QC tasks.

- Low Latency Control: In industrial automation, decisions often must be made within milliseconds or less (e.g. a robotic arm adjusting its path). Edge AI provides the necessary low latency – the control loop stays on the factory floor rather than incurring network delays. This allows real-time process optimization and adaptive manufacturing systems.

- Data Cost Savings: Factories generate huge data streams from sensors. Processing data on-site means only pertinent summaries or anomalies are sent to the cloud for logging. This saves bandwidth and cloud storage costs, which is significant given the scale of IIoT data.
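The predictive-maintenance and data-saving benefits above can be illustrated together with a small on-device filter. This is a sketch under stated assumptions: a rolling z-score rule stands in for whatever model a real deployment would use, and the class name, window size, threshold, and vibration readings are all hypothetical.

```python
from collections import deque
from statistics import mean, stdev


class EdgeAnomalyFilter:
    """Keeps a rolling baseline on the device and flags readings that deviate from it."""

    def __init__(self, window: int = 20, threshold: float = 3.0, warmup: int = 5):
        self.history = deque(maxlen=window)
        self.threshold = threshold
        self.warmup = warmup

    def process(self, reading: float) -> bool:
        """Return True if the reading is anomalous and should be sent upstream."""
        is_anomaly = False
        if len(self.history) >= self.warmup:
            mu, sigma = mean(self.history), stdev(self.history)
            if sigma > 0 and abs(reading - mu) > self.threshold * sigma:
                is_anomaly = True
        if not is_anomaly:
            # Only normal readings update the baseline, so outliers do not skew it.
            self.history.append(reading)
        return is_anomaly


if __name__ == "__main__":
    # Hypothetical vibration amplitudes: steady operation, one spike, then steady again.
    readings = [1.0, 1.1] * 15 + [5.0] + [1.0, 1.1]
    edge_filter = EdgeAnomalyFilter()
    forwarded = [r for r in readings if edge_filter.process(r)]
    print(f"forwarded {len(forwarded)} of {len(readings)} readings: {forwarded}")
```

In this toy run only the spike would leave the device, which is the pattern behind both the faster alerts and the bandwidth savings described in the list.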

Challenges: Implementing Edge AI in manufacturing does come with challenges:

- Integration with Legacy Systems: Many industrial environments have older machinery and control systems. Integrating modern edge AI devices with legacy equipment (PLC controllers, SCADA systems) can be complex. Ensuring new AI systems communicate properly with existing production line controls often requires custom engineering.

- Physical and Environmental Constraints: Factory floors can be harsh (heat, vibration, dust). Edge devices must be ruggedized. Issues like mounting hardware near machines, providing reliable power and network in industrial settings, and protecting devices from interference are non-trivial. For instance, ensuring “ease of installation and physical access control” for edge panels and gateways has been a key focus in recent industrial Edge AI solutions (roboticstomorrow.com).

- Data Security: As operations become connected, manufacturers face IT/OT convergence issues. Edge AI devices need to be secure to prevent tampering or cyber-attacks that could disrupt production. Proprietary process data handled by edge AI also needs protection from espionage or leaks.

- Skill and Culture Gaps: There can be resistance or lack of expertise in traditionally mechanical/electrical engineering-dominated manufacturing teams when introducing AI. Upskilling staff or hiring new talent versed in AI/ML and edge computing is a hurdle. Additionally, some workers may be concerned about automation replacing jobs, requiring thoughtful change management.

Case Study: A large automotive plant implemented an edge AI vision system for paint quality inspection. The system immediately flagged microscopic paint defects. Benefit: scrap rate dropped by 15% in the first quarter, as flawed parts were fixed earlier. Challenge: initially, the AI system had false positives that slowed the line. Engineers had to fine-tune the model on-site (a process requiring ML expertise) to align with real-world conditions. Once resolved, the system delivered consistent results and workers came to trust its judgments, using it as a tool to enhance (not replace) their own inspections.

Healthcare and Medical Devices

Use Cases: In healthcare, Edge AI is applied in patient monitoring, diagnostics, and medical device automation. Hospitals are deploying edge AI to continuously monitor patient vitals using connected devices – for example, wearable ECG patches analyzing heart rhythms in real time and alerting staff of arrhythmias, or smart cameras in hospital rooms detecting if a patient has fallen. In one example, U.S. hospitals already have 10–15 edge devices connected per bed, and it’s estimated that by 2025, 75% of medical data will be generated at the edge. Edge AI is also used in imaging equipment: MRI and CT scanners with built-in AI can automatically flag suspicious lesions on images right as the scan is happening, helping radiologists prioritize cases. Another emerging area is surgical assistance – AI-enabled surgical tools can provide real-time guidance (e.g. highlighting anatomy or tumor margins on a surgeon’s AR display) without needing an internet connection in the operating room.

Benefits: Edge AI offers tremendous advantages in healthcare settings where every second and every byte of privacy counts:

- Real-time Decision Support: Medical insights are available immediately at the point of care. For instance, an edge AI on a vitals monitor can alert clinicians of a patient’s deterioration within seconds, rather than waiting for data to upload and be analyzed in the cloud. This speed can save lives in critical care and emergency situations. One source notes that real-time data analysis via edge AI can improve emergency room monitoring and even provide instant feedback during surgery to prevent errors (volersystems.com).

- Enhanced Privacy Compliance: Patient data (imaging, vital signs, medical records) often falls under strict privacy laws (HIPAA, GDPR). Edge processing keeps data locally on the device or hospital network, minimizing transmission of Protected Health Information (PHI) to external servers (volersystems.com). This reduces legal risk and patient privacy concerns. Doctors are more willing to use AI when they know sensitive data isn’t leaving the premises.

- Operational Resilience: Hospitals cannot rely on cloud connectivity for mission-critical systems. Edge AI ensures that even if internet access is lost, devices like infusion pumps or diagnostics tools powered by AI continue functioning. This local autonomy is crucial for healthcare facilities in remote or bandwidth-limited locations (rural clinics, mobile ambulances) to benefit from AI.

- Cost and Bandwidth Savings: Transmitting large medical images or continuous high-resolution patient telemetry to the cloud is bandwidth-intensive and expensive. Processing those at the edge drastically cuts down data volumes. One study pointed out that edge AI can substantially lower the costs of data gathering and transmission in medical devices (volersystems.com). Only salient results (e.g. an alert or compressed summary) are sent over the network.

Challenges: While promising, healthcare Edge AI faces unique hurdles:

- Regulatory Approval: Medical AI, especially when deployed on devices, often requires regulatory clearance (FDA, CE mark). Any change in the AI algorithm could necessitate re-approval. This makes rapid iteration difficult and requires rigorous validation of edge AI solutions. Regulators also demand explainability; one challenge cited is getting edge AI to describe its reasoning to support clinical validation (volersystems.com).

- Data Quality and Volume: Edge AI devices must be fed high-quality data. If a wearable sensor has noise or a camera has poor lighting, the on-device AI may misinterpret signals. Ensuring edge-collected data is accurate and developing robust models that can handle variability is an ongoing challenge (volersystems.com). There’s less oversight once deployed, so these devices need to be exceptionally reliable.

- Integration in Clinical Workflow: Doctors and nurses already use many systems. Introducing new edge AI devices means they must seamlessly integrate with electronic health records (EHR) and alerting systems to be effective. Otherwise, they risk alarm fatigue or being ignored. Gaining user trust is another aspect – clinicians might be skeptical of AI recommendations. It takes time and training for medical staff to grow comfortable working alongside AI tools and to design workflows that make the best use of them.

- Ethical and Legal Concerns: If an edge AI system makes a wrong diagnosis or misses an alert, liability is a concern. Manufacturers and healthcare providers are figuring out who is responsible in such cases (volersystems.com). Ethically, using AI for patient care raises issues of informed consent (patients should know if AI is involved in their treatment) and bias (AI models must be trained on diverse data to work for all patient populations). These factors can slow down adoption until thoroughly addressed.

Case Study: A healthcare startup developed an edge AI stethoscope – a digital stethoscope that uses an onboard AI model to detect heart murmurs and lung abnormalities as the doctor listens. Benefit: In trials, it helped general practitioners identify subtle cardiac issues with 87% accuracy, matching specialist-level diagnostic ability on certain conditions. The AI runs on the device itself, giving an instant “second opinion” to the doctor during the exam. Challenges: Gaining physicians’ acceptance was initially difficult; they were concerned about trusting a “black box.” The developers addressed this by making the AI’s output explainable (it would highlight which sound patterns led to the suggestion) and by providing training sessions. Regulatory approval took time, but the device has since been approved in multiple countries. It’s now being used in remote clinics, where it effectively brings cardiology expertise to underserved areas via Edge AI.

Transportation and Smart Mobility

Use Cases: The transportation sector, including automotive and public transit, is at the forefront of Edge AI deployment. Autonomous vehicles (self-driving cars, drones, autonomous delivery robots) are a marquee example: they rely on a suite of edge AI systems (computer vision, sensor fusion, path planning) running onboard in real time to make driving decisions. A self-driving car is essentially a data center on wheels, ingesting camera, LiDAR, and radar data and requiring instantaneous analysis – “decisions must be made in the blink of an eye” with no time to consult the cloud. Edge AI is also used in advanced driver-assistance systems (ADAS) in human-driven cars (for collision avoidance, driver monitoring, etc., all computed locally in the vehicle).

Public transportation and city traffic management are adopting Edge AI too. Examples: smart traffic lights with embedded AI cameras that adjust signals based on vehicle and pedestrian flow; AI sensors in trains that monitor tracks and equipment in real time for faults; and edge AI on buses for passenger counting or safety surveillance. Connected vehicles (V2X) also use edge computing at roadside units to do things like local hazard detection and then broadcast alerts to nearby cars within milliseconds.

Benefits: In transport, Edge AI’s ultra-low latency and autonomy bring critical benefits:

- Safety: For autonomous or semi-autonomous vehicles, onboard edge AI is effectively a prerequisite for meeting safety requirements. A car cannot depend on a remote server to decide when to brake for an obstacle – the network latency could be deadly. Onboard AI processes sensor data in real time (often within milliseconds), enabling immediate reactions (braking, steering adjustments). This local processing can be life-saving. Even in connected vehicle scenarios, local edge nodes ensure fail-safe operation if communication lags. Many modern cars now have features like automatic emergency braking and lane-keep assist powered by edge vision AI, which help reduce accidents by reacting faster than human drivers can.

- Reduced Network Dependency: Vehicles often traverse areas with poor or no connectivity. Edge AI allows continuous operation regardless of network availability – an autonomous drone can navigate off-grid; a shipping truck can use AI for engine diagnostics in remote regions without waiting to send data to cloud. This independence is crucial for reliability and is a direct result of robust edge computing.

- Traffic Efficiency: Edge AI in infrastructure (e.g. smart traffic cameras) helps optimize traffic flow by analyzing local conditions. A city that implements edge AI at intersections can adapt traffic light timing dynamically to current traffic, reducing congestion. It can also handle transient events – for example, detecting an accident on a street via edge cameras and immediately diverting traffic by changing digital signs or lights, all without a round-trip to a central server. This leads to smoother transportation systems and less commuter delay.

- Passenger Experience: In public transit, edge AI can improve experiences – like intelligent buses that adjust climate control or provide personalized onboard information based on who’s riding (without sending personal data to the cloud). Surveillance cameras with edge AI can increase security by identifying suspicious activities in real time, making riders feel safer.
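To make the latency argument in the safety point concrete, the short calculation below estimates how far a vehicle travels while it waits for a decision. The speed and the two latency figures are illustrative assumptions, not measurements from the sources cited in this report.

```python
def distance_during_latency_m(speed_kmh, latency_ms):
    """Distance in metres a vehicle covers before a decision arrives."""
    speed_m_per_s = speed_kmh * 1000.0 / 3600.0
    return speed_m_per_s * latency_ms / 1000.0


if __name__ == "__main__":
    # Hypothetical latencies: on-board inference vs. a cloud round trip.
    for label, latency_ms in [("on-board", 10), ("cloud round trip", 150)]:
        d = distance_during_latency_m(speed_kmh=100, latency_ms=latency_ms)
        print(f"{label:>16}: {d:.2f} m travelled at 100 km/h")
```

Under these assumed numbers, the gap between local and remote processing is several metres of travel at highway speed, which is the margin that matters for emergency braking.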

Challenges:

- Safety & Validation: While edge AI enables safety features, it must itself be extremely safe. Validating autonomous vehicle AI under all possible scenarios is an enormous challenge. Edge AI systems in transport need exhaustive testing to ensure they handle edge cases (pun intended) – unusual road conditions, rare events, etc. The liability for failure is high, so proving reliability to regulators (and the public) is a hurdle that has slowed the rollout of full self-driving systems.

- Computing Constraints: Autonomous vehicles pack supercomputer-like capabilities, but they are still limited by power and thermal constraints in a car environment. Balancing the computational heavy lifting of AI (for vision, radar processing, etc.) with the need to fit in a car’s power budget and heat dissipation capacity is tough. It requires specialized automotive-grade AI hardware and very efficient software. Even then, vehicles might not run the most complex AI models simply due to resource limits – engineers must optimize models to fit within memory and processing ceilings.

- Cost: Equipping vehicles or infrastructure with advanced edge AI hardware adds cost. LiDAR, cameras, and AI chips can increase a car’s price significantly. Although costs are coming down, this can impede adoption in lower-end vehicles or in cities with tight budgets for upgrading infrastructure. There’s a trade-off between the sophistication of edge AI and economic viability, especially in public sector deployments.

- Regulatory and Ethical Issues: Self-driving cars raise ethical dilemmas (decision-making in unavoidable crash scenarios, for instance) and regulatory questions (how to certify an AI driver). Even simpler uses like AI traffic cameras must be deployed carefully to respect privacy (e.g. avoiding storing identifiable data). Some jurisdictions have strict rules that can hamper more invasive uses of AI (like facial recognition in public). Navigating the regulatory landscape is part of the challenge for edge AI in transportation.

Case Study: Autonomous Shuttle: A city pilot-tested autonomous electric shuttles to connect transit hubs. Each shuttle uses multiple Edge AI systems – object detection to spot pedestrians, path planning AI for routing, and diagnostic AI to monitor vehicle health. Benefits: During the pilot, the shuttles successfully navigated complex urban environments and had zero accidents in 20,000 miles of operation, thanks in large part to fast-reacting edge AI (e.g. instantly stopping for jaywalkers that human drivers often miss). The shuttles also collected traffic pattern data that the city later used to adjust signal timings, reducing bus travel times by 5%. Challenges: On particularly complex left-turns in heavy traffic, the shuttle’s AI grew overly cautious, causing delays. Engineers had to refine the algorithm logic. Additionally, public riders were initially nervous about a vehicle with no human driver – the city added an “AI concierge” voice that explained the shuttle’s actions (“Yielding for pedestrian”) to reassure passengers. This mix of technical tuning and human factor adjustments was needed to move the project forward. The pilot’s success is now leading to a broader deployment of these edge-AI-driven shuttles.

Smart Homes and Consumer Electronics

Use Cases: In the smart home domain, Edge AI has become a key differentiator for devices like smart speakers, security systems, appliances, and personal electronics. A common example is voice assistants (Amazon Alexa, Google Assistant, Apple Siri) – originally they sent every voice command to the cloud for interpretation, but now they increasingly perform on-device speech recognition for wake words and simple commands. New smart speakers and phones can recognize “Hey Alexa” or “Hey Siri” locally and even carry out certain requests (setting a timer, controlling local devices) without a cloud round-trip. Similarly, AI-powered security cameras for home use are embedding edge AI chips to detect people, pets, or packages at the door. This way, they can send intelligent alerts (“Person at front door”) rather than raw video, and they keep working, and keep footage private, when the internet is down. Other examples include smart thermostats that learn your schedule with on-device AI, washing machines that detect fabric types and dirt levels with optical sensors to optimize the wash cycle, and even vacuum robots using edge AI vision to identify obstacles (like avoiding pet waste – a famously advertised use of onboard AI!).

Benefits: For consumers, Edge AI in home devices yields tangible improvements:

- Privacy: Perhaps the biggest selling point – keeping personal data in-home. A smart camera that does AI processing internally means video of your living room isn’t constantly streaming to the cloud. Likewise, an AI voice assistant that handles commands on-device (like Apple’s latest Siri processing on iPhones) means your voice recordings stay private. This addresses one of the main concerns consumers have with smart devices. As one commentary noted, edge AI devices avoid turning your home into a “creepy surveillance zone” for others (blog.heycoach.in). Instead, data like audio and video can be analyzed locally and only non-sensitive results (e.g. “lights turned off at 10:00 PM”) are sent out.

- Responsiveness: Edge AI cuts lag. Lights turn on the moment you ask. Doorbell cameras can chime instantly when someone is recognized, instead of waiting for cloud confirmation. This snappiness makes smart home interactions feel more natural and reliable. It also enables offline functionality – you can still control your smart home during an internet outage (ask the local voice assistant to lock the doors, and it will).

- Bandwidth and Cloud Cost Savings: For users with bandwidth caps or slow internet, edge processing is a savior. Analyzing video inside the home rather than streaming it to the cloud saves data. A single smart camera can use dozens of GB per month if it uploads to the cloud continuously. Edge AI that sends only relevant clips or messages dramatically reduces this. It also means the user (or device maker) doesn’t have to pay for as much cloud storage or processing power, potentially lowering subscription costs for cloud services associated with devices.

- Customization and Control: Enthusiast users appreciate that with edge processing, they have more direct control over the AI’s behavior. Some systems allow running custom AI models on local hubs (e.g. open-source home automation hubs that can run face recognition to disarm security when a family member is recognized). This flexibility caters to power users and fosters innovation in the smart home space by leveraging community-developed edge AI models.
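The bandwidth point above can be checked with simple arithmetic. The sketch below compares a camera that uploads continuously with one that uploads only short event clips. The 200 kbps bitrate and the 20 clips of 15 seconds per day are hypothetical values chosen for illustration; real cameras vary widely.

```python
SECONDS_PER_DAY = 86_400


def monthly_upload_gb(bitrate_kbps, seconds_per_day, days=30):
    """Data uploaded per month, in gigabytes (1 GB = 1e9 bytes)."""
    bytes_per_second = bitrate_kbps * 1000 / 8
    return bytes_per_second * seconds_per_day * days / 1e9


if __name__ == "__main__":
    # Hypothetical camera: 200 kbps stream; 20 event clips of 15 s per day.
    continuous = monthly_upload_gb(200, SECONDS_PER_DAY)
    events_only = monthly_upload_gb(200, 20 * 15)
    print(f"continuous upload: {continuous:.1f} GB/month")
    print(f"event clips only:  {events_only:.3f} GB/month")
```

With these assumed inputs, continuous upload comes to about 65 GB per month, while event-only upload stays well under 1 GB.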

Challenges:

- Device Constraints: Many smart home devices are small and inexpensive, so adding powerful hardware isn’t always feasible. Manufacturers must balance cost, size, and power. For instance, a $50 security camera can’t include an expensive GPU – it may have to rely on an efficient ASIC or a less powerful processor, which limits the complexity of AI it can run. Edge AI models for such devices must be highly optimized. There’s often a trade-off between accuracy and model size that engineers grapple with.

- Interoperability: The smart home ecosystem is fragmented. Different brands and standards may not play nicely. If each device has its own edge AI, getting them to share insights or coordinate can be hard (e.g. camera from Brand A identifying a person and telling Brand B’s alarm system to disable). Efforts like the Matter protocol are trying to unify IoT communication, but achieving seamless integration remains a challenge. Without cloud as an intermediary, peer-to-peer coordination relies on local networking that might be complex to set up.

- Security: Ironically, while edge AI improves privacy, the devices themselves can be targets for hackers if not properly secured. A vulnerability in a smart camera could allow someone to access its video feed or even its on-device AI data. Lack of standard security protocols across IoT devices is a known issue (blog.heycoach.in), making some devices an “easy target for cybercriminals.” Manufacturers vary in their security diligence, and many consumers don’t regularly update device firmware, if updates exist at all (blog.heycoach.in). As edge devices proliferate, ensuring they are not the weak link in home network security is critical.

- User Awareness and Trust: Some consumers are unaware of the benefits of edge AI and might not actively seek it out. It’s an education challenge for companies to market privacy and offline capability as differentiators. Moreover, if an edge AI misidentifies something (say, the smart lock fails to recognize the homeowner and locks them out), users could lose trust quickly. There is little tolerance for “smart” devices making “dumb” mistakes at home, so the edge AI must be well-trained for the context or provide fallback options.

Case Study: Smart Doorbell Camera: A popular home security brand updated its doorbell camera to include an edge AI chip for person detection. Benefit: Instead of sending every motion event to the cloud (which often resulted in false alarms from tree branches or passing cars), the device itself now distinguishes people from other movement. Homeowners receive far fewer notifications, and when they do, the alerts are more meaningful (“Person spotted at front door” with a snapshot). The device also has an option to recognize familiar faces (friends/family) on-device, so it can, for instance, announce them by name on connected speakers – all without storing those faces in any cloud database. Challenges: During development, achieving high accuracy in varied outdoor conditions (night, rain, shadows) was tough with the limited processing available. Early models missed people wearing certain clothing or in low light. The company addressed this by collecting a more diverse dataset and incrementally updating the model (which they could do via firmware updates to the device). They had to walk a fine line in communicating the feature: explicitly explaining that the camera does AI analysis locally to alleviate privacy worries, but also warning users that it’s not 100% foolproof and traditional recording is still happening as backup. Today, that edge-enabled doorbell is one of their best-selling products, indicating consumers value the smarter alerts and privacy safeguards.

Other Notable Sectors:

- Smart Cities: Edge AI is deployed in urban infrastructure – from traffic systems (as discussed) to environmental monitoring (sensors with on-board AI detect pollution spikes or noise anomalies in real time) and public safety (streetlight cameras analyzing foot traffic patterns or spotting incidents). For city governments, the benefits are improved municipal services and quicker response times, while challenges include scaling to city-wide deployments and addressing surveillance/privacy concerns in public spaces.

- Retail: Retailers use Edge AI for in-store analytics and automation. Smart shelf cameras and sensors can do footfall analysis, queue management, and even demographic analysis of shoppers locally (advian.fi). Some stores have implemented “grab-and-go” shopping where cameras and weight sensors with edge AI track what items customers pick up, enabling checkout-free experiences (e.g. Amazon Go stores). Benefits are richer data and personalized experiences; challenges revolve around accuracy (misidentifying products or customers) and integrating with existing retail IT systems.

- Energy & Utilities: The energy sector employs edge AI in smart grids and renewable energy management. Edge devices at solar farms or wind turbines use AI to predict power output and detect faults (e.g. a wind turbine’s edge controller can flag when wind patterns indicate a storm, triggering preventative measures). Smart grid nodes use edge AI to balance load or isolate faults in milliseconds to prevent blackouts (advian.fi). This yields a more resilient grid and efficient energy usage. The challenges here include deploying devices in sometimes remote, harsh conditions and ensuring interoperability across a wide grid network.

- Agriculture: In “smart farming,” edge AI drives innovations like autonomous farm machinery (tractors with edge vision to navigate and identify crops/weeds), drone-based crop monitoring (drone analyses images on the fly to find pest infestations), and IoT soil sensors (with TinyML models that decide when to trigger irrigation). Benefits are increased yield and reduced resource waste via precision agriculture. Challenges lie in making devices rugged, low-cost for farmers, and dealing with variable outdoor conditions that can confuse AI models.

Each of these sectors demonstrates the versatility of Edge AI – the technology is adaptable to many contexts because fundamentally, wherever there is data generated outside the cloud and a need for quick, intelligent action, edge computing and AI make a compelling pair.

Despite differences in application, some common themes emerge. Benefits generally include real-time responsiveness, improved privacy, and operational efficiency. Challenges often relate to technical integration, reliability, and trust/governance issues. As we approach 2026, ongoing advancements (discussed in Section 2) are gradually lowering the technical barriers, and a growing number of positive case studies (like those above) is helping industries overcome cultural and organizational hesitations. The result is growing momentum for Edge AI adoption across virtually all sectors of the economy.

4. Future Prospects and Challenges

By 2026, Edge AI is expected to be deeply ingrained in business operations and daily life, driving significant social and economic impacts. However, several challenges – technical, ethical, and legal – will need to be addressed to fully realize its potential. In this section, we assess how Edge AI might diffuse in the near future and discuss the key hurdles and solutions on the horizon.

Projected Diffusion by 2026: All indicators suggest that Edge AI will see broad mainstream adoption by 2026, transitioning from early adopter use cases to ubiquitous deployment:

- Pervasive Edge Devices: The number of edge AI-enabled devices will explode. Estimates show the global market for edge AI hardware growing from 2.3 billion units in 2024 to about 6 billion by 2030 (globenewswire.com), implying billions of new edge AI devices coming online by 2026. In practical terms, this means many of the objects in our environment – vehicles, appliances, machines, infrastructure – will have some level of on-device intelligence. Consumers may routinely interact with dozens of Edge AI devices daily (from one’s smartphone and smart home gadgets in the morning, to AI-assisted traffic systems during the commute, to edge-enabled machines at work).

- Enterprise and Industrial Uptake: Edge AI will become a standard component of enterprise IT and OT (operational technology) strategies. Forecasts suggest that about 75% of enterprise data could be processed outside centralized data centers by 2025 (developer.nvidia.com), as organizations leverage edge computing for latency and privacy reasons. Factories will routinely run AI on the shop floor, retailers in every store, and logistics firms throughout their supply chains (e.g. smart sensors on packages, AI in warehouses). The economic impact will be notable: improved productivity from edge automation, reduced downtime, and new services (like real-time analytics offerings) will contribute to GDP growth. By 2026, Edge AI could be a key enabler of hundreds of billions of dollars in economic value across industries, through efficiency gains and new revenue streams.

- AI at the “Far Edge” for Consumers: Edge AI will also diffuse at the individual consumer level in forms that make AI more personal and ambient. This might mean AI truly “everywhere” – e.g., personal AI assistants that travel with you on wearable devices, AR glasses with onboard AI enhancing your view of the world in real time, or smart home systems that proactively adapt without sending data to cloud. The social impact here is an increase in convenience and personalization, but also an adjustment as people get used to AI-enabled objects making autonomous decisions around them. For instance, by 2026 it may be normal for your car to negotiate with a parking garage AI for a spot, or for your fridge to directly communicate with a store’s system to reorder food based on its edge AI sensing.
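The unit forecast in the first point above (2.3 billion units in 2024, about 6 billion by 2030) can be turned into an implied annual growth rate and a rough 2026 figure. The sketch below assumes smooth compound growth between the two cited endpoints, which the source does not state; the function names are illustrative.

```python
def implied_cagr(start, end, years):
    """Compound annual growth rate that takes `start` to `end` in `years`."""
    return (end / start) ** (1 / years) - 1


def project(start, rate, years):
    """Value after `years` of compound growth at `rate`."""
    return start * (1 + rate) ** years


if __name__ == "__main__":
    # Endpoints from the text; constant growth in between is an assumption.
    rate = implied_cagr(2.3, 6.0, 2030 - 2024)
    units_2026 = project(2.3, rate, 2026 - 2024)
    print(f"implied CAGR: {rate:.1%}")
    print(f"interpolated 2026 units: {units_2026:.2f} billion")
```

Under that assumption, the figures work out to roughly 17% annual growth and a little over 3 billion units in 2026.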

Social and Economic Impacts: As Edge AI diffuses, it will bring several impacts:

- Empowerment of Remote/Underserved Areas: Edge AI can function with limited connectivity, which means rural or underserved regions can benefit from AI locally. For example, telemedicine kits with edge AI diagnostics can serve villages with poor internet, or agricultural AI devices can help farmers far from urban centers. This could somewhat democratize access to AI, narrowing the digital divide because reliance on cloud infrastructure (which favors developed urban areas) is lessened.

- Job Transformation: Edge AI will automate certain tasks (like quality inspection, traffic monitoring, etc.), which might displace some jobs while creating others. The net effect by 2026 is expected to be a shift in job skill requirements: demand will grow for roles in managing and maintaining edge devices, data engineering for distributed systems, and interpreting AI outputs in business contexts. Routine monitoring jobs may decline, but new jobs will emerge in fields like “edge system operations” and “AI field technician.” Companies and governments will need to invest in retraining programs so the workforce can move into the new roles created by edge computing and AI.

- Real-time Society: With Edge AI, many processes speed up, leading to what could be termed a more “real-time society.” Services and responses that used to take minutes or hours (or required human intervention) might become instantaneous and automated. Emergency response can improve (disasters detected by edge sensors triggering immediate local alerts), customer service might be enhanced by on-premises AI systems in stores, and overall expectations for immediacy in services will rise. Economically, this may increase productivity and throughput in systems ranging from transportation (more vehicles moving efficiently) to manufacturing (faster production cycles).

- Local Data Economies: Edge AI might encourage keeping data local, which could give rise to local data marketplaces or collaboratives. For instance, city governments could aggregate insights from all city-deployed edge devices and offer them (in a privacy-safe form) to local businesses or researchers. The value of data will be realized at the edge where it’s generated, potentially changing the dynamics of a tech economy that currently centralizes data in the hands of cloud giants. By 2026, we may see new business models where companies sell “edge analytics appliances” or subscriptions to on-site AI services as opposed to cloud services – influencing how tech companies generate revenue.

Technical Challenges and Solutions: Despite the optimistic outlook, several technical hurdles need addressing for seamless Edge AI adoption:

- Standardization and Interoperability: Currently, the edge AI landscape is fragmented – myriad device types, operating systems, and AI frameworks. A lack of hardware and software standards makes developing and deploying edge AI across platforms difficult (syntiant.com). By 2026, industry groups are expected to push standards for IoT and edge interoperability (like evolving the OpenFog/Edge Computing standards, or initiatives such as the Matter protocol for smart home devices expanding to AI data exchange). Standard APIs and formats for edge AI models would allow a model trained by one tool to run on various edge hardware easily. Progress here will be critical; unified frameworks (perhaps based on containerization or WebAssembly for edge) are emerging to package AI applications in a device-agnostic way.

- Scalability and Manageability: Deploying a handful of edge AI devices is one thing; managing fleets of thousands or millions is another. Organizations will need robust edge orchestration tools – think of it as “edge DevOps.” This includes remote update mechanisms, monitoring of edge AI performance, and health management. Solutions are developing, like Kubernetes-based edge orchestration or specialized IoT management platforms that handle AI models. Over-the-air (OTA) updates with delta changes and fail-safes are becoming standard to keep edge AI models up-to-date securely. By 2026, better management software and possibly AI-driven orchestration (AI to manage AI) will help maintain large-scale edge deployments, addressing the current challenge of scale.

- Resource Constraints: Edge devices will always have limits on compute, memory, and energy. While hardware advances continue, the complexity of AI models (especially with the rise of large language models and advanced deep networks) is also increasing. A challenge is ensuring edge devices can keep up. The likely solution is a combination of more efficient algorithms (like spiking neural networks or neuromorphic computing breakthroughs for edge) and hierarchical processing (i.e., simple decisions on the edge, heavy lifting in near-edge micro data centers if needed). We may see more tiered edge architectures by 2026, where extremely low-latency critical inference happens on the device, slightly larger on-premises edge servers handle medium-latency tasks, and the cloud is used only for non-time-sensitive analytics. This layered approach can mitigate individual device limitations.

- Security: The distributed nature of edge computing greatly expands the attack surface for cyber threats. Each device could be a potential ingress point. Already, lack of standard security in IoT is a problem, with inconsistent protocols and unpatched firmware (blog.heycoach.in). Going forward, adopting zero-trust security models at the edge is vital – every device must have strong identity, and communications should be encrypted end-to-end. Hardware security modules (secure elements) are increasingly being embedded in edge AI chips to provide tamper resistance and secure key storage. Also, techniques like secure boot, regular OTA security patches, and network segmentation for edge devices will be standard practice. By making security a first-class design parameter (and not an afterthought), many current vulnerabilities can be closed. The industry is slowly moving this way, as evidenced by new IoT security regulations in some jurisdictions (e.g. requiring unique passwords, vulnerability disclosure, etc., for connected devices).
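The tiered architecture described under Resource Constraints can be sketched as a simple placement rule: run each task on the nearest tier that both meets its latency budget and can hold its model. The tier names, latency figures, and model-size limits below are hypothetical placeholders, not measurements.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tier:
    name: str
    typical_latency_ms: float  # assumed round-trip latency to this tier
    max_model_mb: float        # assumed largest model the tier can host


# Hypothetical tiers, ordered from closest to farthest from the data source.
TIERS = [
    Tier("device", 5, 50),
    Tier("on-prem edge server", 30, 2_000),
    Tier("cloud", 200, float("inf")),
]


def choose_tier(latency_budget_ms, model_mb, tiers=TIERS):
    """Return the nearest tier that meets the deadline and fits the model."""
    for tier in tiers:
        fits = model_mb <= tier.max_model_mb
        fast_enough = tier.typical_latency_ms <= latency_budget_ms
        if fits and fast_enough:
            return tier.name
    return None  # no tier satisfies both constraints


if __name__ == "__main__":
    print(choose_tier(latency_budget_ms=10, model_mb=20))       # small, urgent
    print(choose_tier(latency_budget_ms=50, model_mb=500))      # medium task
    print(choose_tier(latency_budget_ms=1000, model_mb=10_000)) # batch analytics
```

A `None` result marks a task that is both too urgent for a remote tier and too large for the device, which is the case where model compression or hardware upgrades become necessary.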

Ethical and Legal Challenges: Beyond technical issues, ethical, privacy, and legal frameworks lag behind the technology. Key challenges and anticipated solutions include:

- Privacy and Data Governance: Edge AI keeps data local, but that doesn’t automatically mean privacy is guaranteed. Devices can still collect very sensitive information (faces, voices, behaviors). There need to be policies on data retention and usage on the edge. Who owns the data on an edge device – the user, the device maker, the service provider? By 2026, we expect clearer answers: regulation will likely assert that data collected in personal contexts (home, personal device) is owned by the user, and device makers must be transparent about any data they gather. Techniques like differential privacy (adding noise to data) and on-device anonymization will be more widely used for edge AI systems that do need to share data or summaries. Privacy challenges also extend to consent – users must be informed when edge AI is operating (just as CCTV cameras often have signage). We may see something like “AI inside” labels or auditory notifications, so people are aware of AI presence, akin to camera recording lights. Regulatory bodies like the EU, via the AI Act, are already stipulating transparency requirements for AI – these will apply to edge AI systems as well (digital-strategy.ec.europa.eu).

- Bias and Fairness: Edge AI models could inadvertently perpetuate biases (e.g., a security camera’s AI misidentifying people of a certain demographic more often). Solving this involves conscientious model training with diverse data and ongoing auditing of AI decisions. One benefit of edge AI is that models could be locally tuned to their environment (personalized models), but that also raises the risk of overfitting or bias to a specific setting. By 2026, it’s likely that industry standards or regulations will require bias testing for AI algorithms, and maybe even deployment of explainable AI (XAI) methods on the edge so that decisions can be interpreted if contested. For instance, if a smart door lock’s AI refuses entry, there should be a log or explanation to review. The tools for XAI on resource-limited devices are nascent, but research is active. Techniques like local interpretable model-agnostic explanations (LIME) or simplified surrogate models might be packaged with edge AI deployments.

- Legal Liability and Accountability: When an edge AI system causes harm or error, legal systems worldwide will need to determine accountability. Is it the manufacturer’s fault? The operator’s? The AI developer’s? By 2026, we’ll likely have seen landmark cases that set precedents in this area. One approach to clarify liability is through explicit standards and certifications – e.g., if an autonomous machine adheres to all standards and is certified safe, yet something goes wrong, liability might shift to the operator. Another approach is insurance mechanisms: we might see insurance products for edge AI devices (much like car insurance) become common, pricing the risk and handling payouts for incidents. Policymakers are discussing frameworks such as requiring a “human in the loop” for certain decisions, or mandating logging to trace decision processes. Solutions here will evolve as real-world situations test the boundaries of current law.
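The differential privacy technique mentioned under Privacy and Data Governance (adding noise to data) can be illustrated with the Laplace mechanism applied to a count that a device reports upstream. This is a minimal sketch using only the standard library; the function names, the epsilon value, and the example count are assumptions for illustration, and the floating-point sampling here is not hardened for production use.

```python
import random


def laplace_noise(scale, rng=random):
    """Sample zero-mean Laplace noise as the difference of two exponentials."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(true_count, epsilon, sensitivity=1.0, rng=random):
    """Laplace mechanism: add noise with scale = sensitivity / epsilon.

    sensitivity is how much one person can change the count (1 for a
    simple head count). Smaller epsilon means more noise and more privacy.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    return true_count + laplace_noise(sensitivity / epsilon, rng)


if __name__ == "__main__":
    rng = random.Random(42)  # seeded only to make the demo repeatable
    # Hypothetical: a camera counted 37 visitors and reports a noisy total.
    print(round(private_count(37, epsilon=0.5, rng=rng), 1))
```

The device shares only the noisy total, so an observer of the reported value cannot confidently infer whether any single individual was included in the count.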

Looking Ahead: Despite challenges, the trajectory of solutions and adaptive measures gives confidence that Edge AI’s growth will remain on track. Companies, standards bodies, and governments are actively working on mitigating these challenges:

- Tech giants and startups alike are offering more edge-friendly AI development tools and pre-trained models that have privacy and efficiency built-in.

- The emergence of AI ethics guidelines and legislation (like the EU AI Act, whose obligations begin phasing in during 2025–2026) will provide clearer rules of the road, which in turn encourages responsible innovation rather than Wild West deployment.

- Collaboration between stakeholders is increasing – for instance, cities launching pilot programs with researchers to evaluate social impacts of public edge AI, or industry consortia sharing best practices on edge security.

By 2026, Edge AI will be more mature and standardized. We can expect a landscape where interacting with edge AI systems is routine and generally trusted, much like how society adjusted to smartphones over the past decade. The most successful players will be those who navigate the technical hurdles with robust engineering and address ethical/legal concerns proactively, thus winning user trust.

In conclusion, Edge AI is poised to revolutionize how and where we harness AI, bringing intelligence to the edge of the network – everywhere that people and machines generate data. Its development trends point to fast growth, fueled by tech advances in chips and algorithms that make on-device AI practical. Real-world applications across industries are already demonstrating value, from saving lives in hospitals to improving productivity in factories to adding convenience in homes. As we approach 2026, the focus will be on scaling these successes, ensuring interoperability, and doing so ethically and securely. Edge AI’s promise is a future where AI is more immediate, more private, and more embedded in the fabric of daily life – and the ongoing efforts in the tech community aim to solidify that vision.

Sources:

- Market size and regional share: Precedence Research, Edge AI Market Size, Share and Trends 2024 to 2034 (precedenceresearch.com); Fortune Business Insights, Edge AI Market 2023–2032 (fortunebusinessinsights.com); GlobeNewswire (ResearchAndMarkets), World Edge AI Market Outlook (globenewswire.com).

- Segment growth (hardware/software/services): NextMSC Report (gminsights.com); MarketsandMarkets (marketsandmarkets.com).

- Technological advances: GlobeNewswire, Edge AI Hardware Market 2025 (globenewswire.com); Example of Edge TPU 3.0 (precedenceresearch.com); NVIDIA Jetson Orin performance (electropages.com); Wevolver Edge AI Report, on quantization/pruning (wevolver.com); Federated learning in Edge AI (wevolver.com).

- Application case examples: Advian Blog, manufacturing quality control (advian.fi); NVIDIA blog, healthcare edge devices per bed and 75% of data at the edge by 2025 (developer.nvidia.com); Voler Systems, healthcare edge AI benefits and challenges (volersystems.com); Advian, autonomous vehicles need edge decisions in the blink of an eye (advian.fi); Advian, retail video analytics (advian.fi); HeyCoach blog, privacy issues in smart home edge devices (blog.heycoach.in); Syntiant, challenges of edge AI deployment (syntiant.com).

- Future prospects and challenges: IDC forecast via EdgeIR, $317B edge computing spend by 2026 (edgeir.com); Wevolver/Computer.org, accuracy-latency tradeoffs (computer.org); HeyCoach, security challenges such as lack of updates and standardization (blog.heycoach.in); EU AI Act announcement (digital-strategy.ec.europa.eu); Voler, medical device edge AI challenges like explainability and liability (volersystems.com).